To shade a point, you locate its position in the light volume, sample the colour and intensity values there, and then, based on the point's normal, shade it according to the incoming light intensity. The first version I used is as follows.

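```hlsl
// Positive normal components weight the +X/+Y/+Z luminance, negative components the -X/-Y/-Z luminance
float intensity = dot(saturate(normal),posLuminence) + dot(saturate(-normal),negLuminence);
```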

However, when I implemented it, a scene with equal intensity from all directions came out unevenly shaded. The thin darker bands show the correct intensity, but too much light accumulates wherever the intensity is interpolated.
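To see why, here is a rough CPU-side sketch of the same calculation in Python. The helpers `saturate`, `dot` and `normalize` are stand-ins for the HLSL intrinsics, and `naive_intensity` is a hypothetical name. With luminance 1.0 on all six faces, the result is simply the sum of the absolute normal components, so an axis-aligned normal gets 1.0 while diagonal normals get up to √3 ≈ 1.73.

```python
import math

def saturate(v):
    """Clamp each component to [0, 1], like HLSL saturate()."""
    return tuple(min(max(c, 0.0), 1.0) for c in v)

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def normalize(v):
    length = math.sqrt(dot(v, v))
    return tuple(c / length for c in v)

def naive_intensity(normal, pos_lum, neg_lum):
    """Original version: weight each face by the raw normal component."""
    neg_normal = tuple(-c for c in normal)
    return dot(saturate(normal), pos_lum) + dot(saturate(neg_normal), neg_lum)

# Equal luminance of 1.0 on all six faces (+X/+Y/+Z and -X/-Y/-Z).
uniform = (1.0, 1.0, 1.0)
for n in [(1, 0, 0), (1, 1, 0), (1, 1, 1), (-1, 1, -1)]:
    print(n, round(naive_intensity(normalize(n), uniform, uniform), 3))
```

The brightest results land exactly on the in-between normals, which matches the excess light where the intensity is interpolated.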

You'd expect an even intensity across all surfaces, so I'd definitely messed up somewhere. I remembered that Half-Life 2 used a similar ambient cube lighting system, so I looked into how Valve did it and found a few hints here, in section 8.4.1.

Based on that, I ended up with the following solution.

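```hlsl
Input.Normal = normalize(Input.Normal);

// Remember which way (positive or negative) the normal faces on each axis
float3 originalSign = sign(Input.Normal);

// The squared components of a unit normal always sum to 1
float3 nSquared = Input.Normal * Input.Normal;

// Reapply the sign so saturate() still picks the positive or negative face per axis
float3 modifiedNormal = nSquared * originalSign;

float intensity = dot(saturate(modifiedNormal),posLum) + dot(saturate(-modifiedNormal),negLum);
```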
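A matching Python sketch of the modified version follows, assuming (as the shader code suggests) that `posLum` holds the +X/+Y/+Z luminance and `negLum` the -X/-Y/-Z luminance. The helper names, including `ambient_cube_intensity`, are hypothetical stand-ins for the HLSL code.

```python
import math

def saturate(v):
    """Clamp each component to [0, 1], like HLSL saturate()."""
    return tuple(min(max(c, 0.0), 1.0) for c in v)

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def normalize(v):
    length = math.sqrt(dot(v, v))
    return tuple(c / length for c in v)

def sign(x):
    """HLSL-style sign(): -1, 0 or 1."""
    return (x > 0) - (x < 0)

def ambient_cube_intensity(normal, pos_lum, neg_lum):
    n = normalize(normal)
    # Square each component so the six face weights sum to 1, then restore
    # the sign so saturate() can still select the positive or negative face.
    modified = tuple(c * c * sign(c) for c in n)
    neg_modified = tuple(-c for c in modified)
    return dot(saturate(modified), pos_lum) + dot(saturate(neg_modified), neg_lum)

uniform = (1.0, 1.0, 1.0)
print(ambient_cube_intensity((1, 1, 1), uniform, uniform))
```

With uniform luminance every normal now comes out at 1.0 (up to floating-point rounding). With light only on the +X face, a normal halfway between +X and +Y receives half of it.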

And the result?

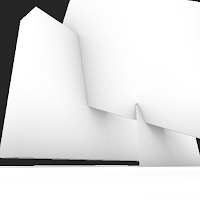

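That seems to have done the trick. I now get much smoother results without artifacts, and an equal intensity from all directions produces a completely flat-shaded surface, as you'd expect. This works because the squared components of a unit normal always sum to one, so the face weights sum to one, whereas the raw components used in the first version sum to anywhere between 1 and √3.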
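Written out for a unit normal $\mathbf{n}$ and the same luminance $L$ on all six faces, the two versions give:

$$
I_{\text{old}} = L\,\big(|n_x| + |n_y| + |n_z|\big), \qquad 1 \le |n_x| + |n_y| + |n_z| \le \sqrt{3}
$$

$$
I_{\text{new}} = L\,\big(n_x^2 + n_y^2 + n_z^2\big) = L\,\lVert \mathbf{n} \rVert^2 = L
$$

The lower bound of the first sum is reached only for axis-aligned normals, which is why only those regions showed the correct intensity.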

Note: The images and code in this post are all based on experiments in 3ds Max. I wrote a MAXScript that baked the lighting results to vertex colours. I normally resort to this method when I get completely stuck :)